Google just took a major step forward in the AI search wars. After a stint as a Google Labs experiment, Search Live has been released in the U.S., a move to broader availability that hints at a fundamental shift in how we interact with information. This is not just another incremental update. Search Live lets users talk to an AI that can also see through their phone's camera, a multimodal experience that feels like having a knowledgeable friend in your pocket.

This technology reflects Google’s push to turn search from a string of queries into a running conversation. Picture a messy kitchen: you point your camera at a mystery gadget and just ask, "What is this?" No awkward keywords. No guessing the exact term. That is the kind of natural interaction Search Live aims for.

What makes Search Live different from everything else?

Here is where it gets interesting. Search Live uses what Google calls "query fan-out" to conduct its searches. The AI can explore multiple angles of your question at once, not just march down one path. Think of it like asking a panel of specialists instead of a single expert.

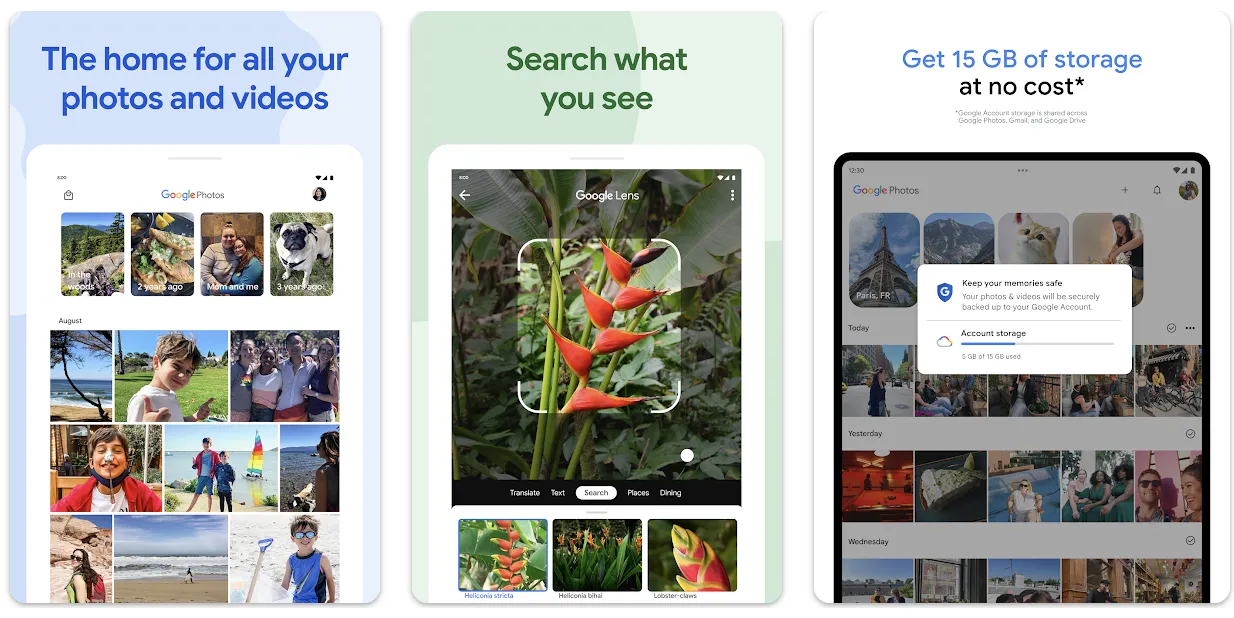

The feature currently lives in the Google app on Android and iOS, where you can point your camera at something and ask questions about it out loud. What stands out is how Search Live lets you interact with the company's "custom" version of Gemini and search the web in real time, so you get a blend of reasoning and fresh web results without switching modes.

PRO TIP: Query fan-out can make Search Live feel fast. A traditional search handles one query at a time, while fan-out explores several angles of a question in parallel, so it can serve up broader results in less time. That is especially helpful for complex, multi-part questions.
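
To make the fan-out idea concrete, here is a minimal Python sketch of the general pattern: split one question into several sub-queries and run them concurrently. It is not Google's implementation, which has not been published in detail. The names `fan_out`, `search_one`, and `run_fan_out` are hypothetical, the list of "angles" is made up for illustration, and the search call is simulated with a short delay rather than a real web request.

```python
import asyncio


async def search_one(subquery: str) -> str:
    """Hypothetical stand-in for a search backend (not a real Google API)."""
    await asyncio.sleep(0.1)  # simulate network latency
    return f"results for: {subquery}"


def fan_out(question: str) -> list[str]:
    """Assumed decomposition step: turn one question into several angles."""
    angles = ["what it is", "how to use it", "common problems"]
    return [f"{question} {angle}" for angle in angles]


async def answer(question: str) -> list[str]:
    subqueries = fan_out(question)
    # gather() runs all sub-queries concurrently and returns their
    # results in the same order as the sub-queries were passed in.
    return await asyncio.gather(*(search_one(q) for q in subqueries))


def run_fan_out(question: str) -> list[str]:
    """Synchronous entry point for the sketch."""
    return asyncio.run(answer(question))


if __name__ == "__main__":
    for line in run_fan_out("matcha whisk"):
        print(line)
```

Because the three simulated searches overlap, the whole call takes roughly as long as one search instead of three in a row, which is the intuition behind fan-out feeling fast.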

Beyond matcha: the real-world applications that matter

So what does this look like day to day? Search Live can guide you through hobbies, like explaining what all the tools in your matcha kit actually do. It can also act as a science tutor, walking students through problems with visuals and step-by-step explanations.

For pure practicality, Search Live can help settle arguments on game night, explaining rules without flipping through instruction booklets. Diagonally or not? Point the camera at the board, ask out loud, keep the game moving.

The bigger shift shows up where text search falls flat. You cannot easily describe a weird dishwasher noise. With Search Live, the AI can hear it and offer targeted troubleshooting. The same goes for identifying mystery plants, poking at car problems by sound, or getting quick fashion advice while you stand in your closet with the camera up.

The technical reality: what works and what doesn't

Let’s be honest about the limits. Search Live's answers may vary in quality. Google did add guardrails, though. Search Live is designed to back up its answers with links, encouraging users to click through to more authoritative resources, which keeps Google a gateway to the wider web instead of a wall.

In our testing, Search Live excels at visual identification and straightforward facts. It stumbles on highly subjective queries and on fast-moving topics where the facts change by the hour. Complex multi-step reasoning can hiccup, but the conversational back-and-forth makes it easy to clarify and try again.

There is also the AI Mode tie-in. Google says Search Live operates in the background, letting you continue your conversation with the chatbot while you navigate to other apps. For multi-step projects, that persistence matters. Imagine a home improvement job where you pick up the thread all afternoon without restating context. I suspect this is where people will feel the biggest win.

Getting started with Search Live today

Ready to try it? You can use Search Live in AI Mode by opting into the AI Mode experiment in Google Labs. Setup is straightforward, but a few limits apply.

The current rollout focuses on voice. Search Live is coming to AI Mode, but you'll only be able to interact with it using your voice for now. When your phone is up and the camera is on, speaking feels natural anyway.

Here is the big catch for the full vision. The test currently doesn't support camera-sharing, but Google plans to add the capability in the "coming months". So for now you get the conversational AI without the live visual layer, a strong preview rather than the whole package.

One practical perk remains. Google will save past Search Live conversations in your AI Mode history, so you can return to earlier threads and build on them over time, which is handy for ongoing projects or slow-burn learning.

Where search is heading next

Bottom line: Google envisions your phone as a window that its AI can look through to answer your questions. This is not just convenience. It is a shift in how we access and verify information.

The ripple effects matter. Search Live will show you links during your conversation, preserving the web’s connective tissue while layering in a conversation you can steer. That balance could keep the broader ecosystem healthy and make searching easier for anyone who finds traditional queries frustrating.

As Google builds Search Live into AI Mode, allowing you to have a back-and-forth voice conversation with the company's AI chatbot right from its search engine, we are likely seeing the early shape of a new default for discovery. The matcha demo feels playful, sure, but it hints at something bigger: a future where getting answers is as easy as asking.

What stands out is how this positions Google in the AI assistant race. While companies like OpenAI and Anthropic focus on general-purpose chatbots, Google leans on its access to live web information to deliver something grounded and immediately useful. The mix of conversational AI, visual understanding, and real-time search may be the formula that makes assistants feel indispensable for everyday tasks.
