Long before Nvidia figured out how to use its graphics processing units (GPUs) to run neural networks for driverless vehicles, it and other chipmakers were already making the same kinds of devices for 3D games and other apps.

GPU makers, including Nvidia, AMD and, to a lesser extent, Intel, remain locked in a decades-long horse race to make their graphics chips ever more powerful. This collective development effort, in turn, gives game developers better hardware with which to design more sophisticated games. Specific to driverless applications is the continued cross-pollination between GPUs for games and the GPU-based computing platforms that power self-driving cars.

Here are a few technologies that continue to improve in GPUs for both gaming and driverless cars.

Parallel Computing That Can Handle a Lot of Stuff

Games depend heavily on parallel computing, in which many computing processes run simultaneously rather than one after another. Parallel computing allows several compute-intensive tasks to run on the GPU at once. In a game, thousands of computing threads can run simultaneously, and the more powerful the GPU, the better the game will run.

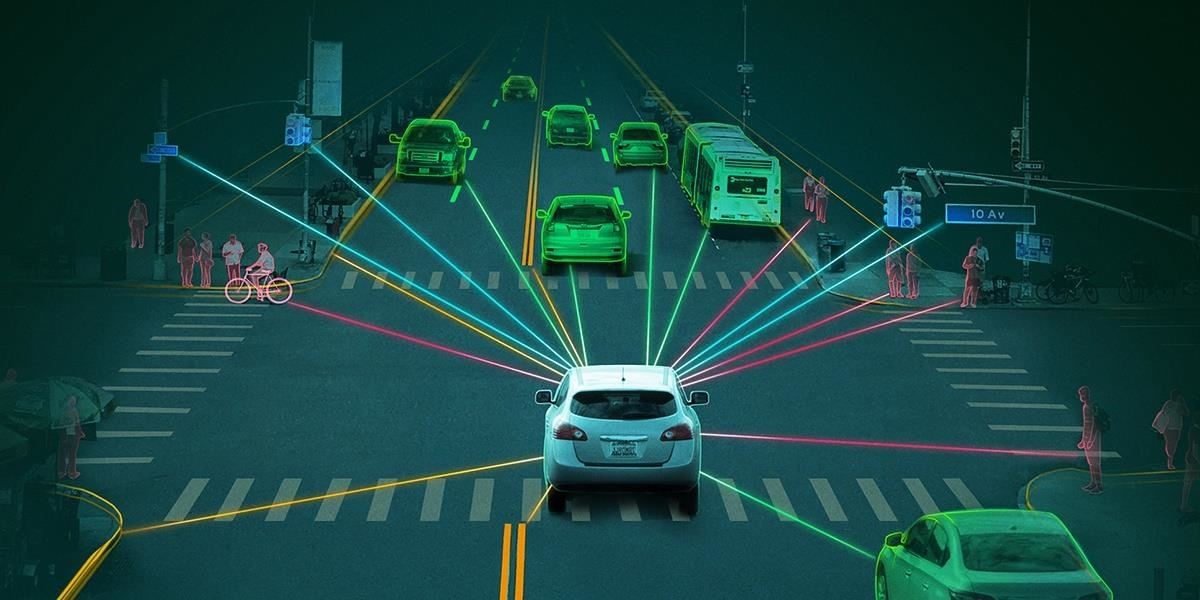

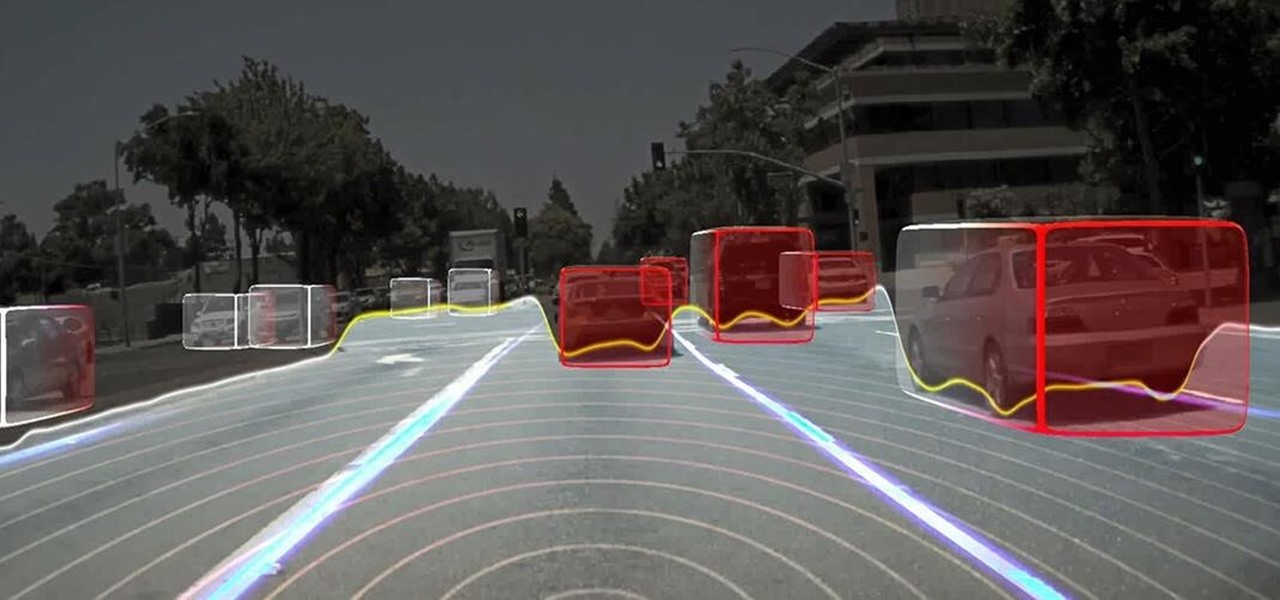

In driverless cars, massive amounts of data are streamed from sensors to GPU devices that serve as the vehicle's central computer. The GPU must process numerous programs and instruction streams on a wide variety of input data, using the same underlying parallel computing structure that runs games.

Running this much code also requires chip hardware that can handle data-intensive tasks. For that, a GPU relies on many processor cores, which in many ways act as separate computing devices within the chip, to share the massive data-processing load. One key difference is that GPUs for driverless-car computer platforms must run on very little power. As a result, a high-end PC graphics card might have over 3,000 processor cores, compared with just a few hundred cores in the GPU of a self-driving vehicle.
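The data-parallel idea behind both games and driverless platforms can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how GPUs are actually programmed (real GPU code uses frameworks such as CUDA): the same small operation, here a hypothetical brightness boost called `shade_pixel`, is applied independently to every pixel of a frame, so the frame can be split into chunks and each chunk handed to a separate worker, much as a GPU spreads work across its cores. Python threads are used only to show the structure; because of CPython's global interpreter lock, they won't speed up this pure-Python arithmetic the way thousands of GPU cores would.

```python
from concurrent.futures import ThreadPoolExecutor


def shade_pixel(value: int, offset: int = 40) -> int:
    """Hypothetical per-pixel operation: brighten by `offset` and clamp to 255."""
    return min(255, value + offset)


def shade_chunk(chunk: list[int]) -> list[int]:
    # Every pixel is independent, so each chunk can be processed on its own.
    return [shade_pixel(v) for v in chunk]


def shade_frame(pixels: list[int], workers: int = 4) -> list[int]:
    # Split the frame into roughly equal chunks, one batch per worker
    # (a stand-in for the many cores of a GPU).
    size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so the frame is reassembled correctly.
        results = pool.map(shade_chunk, chunks)
    return [v for chunk in results for v in chunk]


frame = [0, 50, 100, 200, 250] * 2
print(shade_frame(frame))  # → [40, 90, 140, 240, 255, 40, 90, 140, 240, 255]
```

Because no chunk depends on any other, adding more workers (or, on a real GPU, more cores) lets more of the frame be processed at the same moment without changing the result.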

Artificial Intelligence

The video game sector made great advances once graphics processors became powerful enough to handle the artificial intelligence (AI) code that developers wanted to use. Among other things, AI algorithms make computer-controlled opponents that much smarter and craftier at annihilating players' avatars in the latest versions of all-time best-selling titles such as Call of Duty.

AI development was, in many ways, the genesis of machine learning. Games, however, still mainly rely on rudimentary if-then rules rather than the machine-learned AI that driverless cars use. That said, mainstream games have begun to take advantage of machine-learned AI, though to a lesser extent than driverless cars have.
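The contrast between if-then rules and machine-learned behavior can be shown with a toy Python sketch. Everything in it is a made-up assumption for illustration: a hypothetical game opponent decides whether to attack based only on the player's distance. The first function hard-codes the decision as an if-then rule; the second approach learns a weight and bias from labeled examples using the classic perceptron update rule. Driverless systems use vastly larger neural networks, so this shows only the principle.

```python
def rule_based_attack(distance: int) -> int:
    """Hand-written if-then rule: attack when the player is closer than 5 units."""
    if distance < 5:
        return 1
    return 0


def train_perceptron(distances, labels, epochs=20):
    """Learn a weight and bias from labeled examples with the perceptron update rule."""
    w, b = 0, 0
    for _ in range(epochs):
        for x, y in zip(distances, labels):
            pred = 1 if w * x + b > 0 else 0
            err = y - pred  # -1, 0 or +1
            w += err * x    # learning rate of 1 keeps the arithmetic exact
            b += err
    return w, b


def learned_attack(distance: int, w: int, b: int) -> int:
    """Decision made by the learned weight and bias instead of a hand-written threshold."""
    return 1 if w * distance + b > 0 else 0


# Made-up training data: 1 = "attack", 0 = "hold back".
distances = [1, 2, 3, 7, 8, 9]
labels = [1, 1, 1, 0, 0, 0]
w, b = train_perceptron(distances, labels)
print(w, b)  # → -1 5
print([learned_attack(d, w, b) for d in range(10)])  # → [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
```

In this toy case the learned model ends up with the same cutoff as the hand-written rule, but it found that boundary from the examples alone; given different training data, it would learn a different boundary without anyone rewriting the code.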

Nvidia made a huge leap in its GPU development when it began adapting its GPUs to run neural networks for machine learning applications more than five years ago. This coincided with Google's move to leverage the computational parallelism and graphics processing capabilities of GPUs for neural-network machine learning. Nvidia met the needs of these new applications for Google, as well as for Microsoft, by offering GPU platforms that cost hundreds of thousands of dollars per system. Nvidia later applied these very high-end neural-network GPUs to hardware designs for driverless applications and has since taken a dominant lead in GPUs for driverless cars.

The Big Lead

AMD, Nvidia's chief competitor in the graphics space, has yet to enter the self-driving market, as far as we know. An AMD spokesman did not respond when asked whether AMD plans to develop GPUs for driverless vehicles.

Intel, which also has a presence in GPUs, although its devices are geared mostly toward lower-end graphics, has emerged as a potential competitor following its purchase of Mobileye. It has been speculated that Intel and Mobileye may design a computer platform that runs neural networks on CPUs, but that remains to be seen. Intel and Mobileye have also yet to announce a major design win for driverless applications, and they did not respond to emails and phone calls asking about their driverless plans.

Meanwhile, amid the hype, it is easy to overlook that Nvidia's driverless business represents less than 10% of its revenues. The GPU giant thus has every reason to keep making chips for games and graphically intensive apps. At the same time, Nvidia will invariably draw on the 3D graphics know-how from its gaming and other businesses to make better devices for its burgeoning base of self-driving-vehicle customers. Its design wins include Tesla models sold today with Level 2 features and the recently released Audi A8 with Level 3 capabilities. Nvidia now claims that virtually every driverless system in development today for Level 3 or more-advanced autonomy uses its GPUs.

In the near term, look out for some really cool eye-candy graphics in games thanks to advances in GPU design, while similarly designed devices, mostly from Nvidia, should serve as the computing platforms that replace human drivers in the driverless revolution.
